
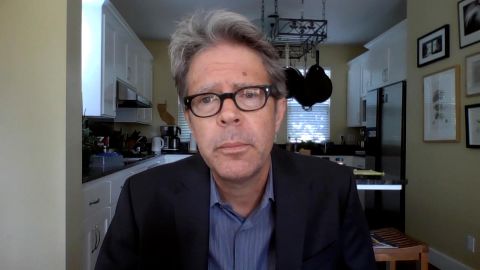

SHEERA FRENKEL, CO-AUTHOR, “AN UGLY TRUTH: INSIDE FACEBOOK’S BATTLE FOR DOMINATION”: This whistle-blower is incredibly well-spoken and versed in Facebook’s algorithms, which I think are difficult for people to understand just how crucial a role they play in promoting hate speech and misinformation. She did the equivalent of coming with the receipts of many of the sort of themes that we had in our book, which is that Facebook constantly makes decisions which promote its business and keep people focused on Facebook and online on Facebook as much as possible, rather than reducing the hate speech and misinformation that we currently have in our media ecosystem.

BIANNA GOLODRYGA: It’s becoming much harder to sort of break down where things stand, because, on the one hand, you have Facebook saying that they’re doing everything in their power to take down hate speech, while, as you and your co-author have pointed out, we don’t really know the denominator. So we don’t know, out of how many posts have they actually been taking hate speech down? Now, though, it does seem, especially with news of this algorithm, that they may have, in fact, wanted to promote some of it, if for no other reason than to keep viewers’ attention on the app.

FRENKEL: Well, I think one of those damning sort of documents that she released, she revealed in these Facebook files was that Facebook executives are constantly given research which shows that they can be tweaking the algorithm to de-emphasize or they call it downrank hate speech and misinformation. And they’re often given, let’s say, five or six options of ways to downrank it. And they’re told, look, if you take the most extreme option, we will effectively really reduce hate speech and misinformation on the platform, but we’re also going to reduce engagement. We’re going to reduce the amount of time people spend on Facebook. And executives regularly, time and time again, make the call not to take that most extreme option, to take often a middle-of-the-road option, because they don’t want to sacrifice people’s attention. They don’t want to sacrifice the amount of time that people spend on Facebook, and, therefore, the amount of money that they make as a company.

GOLODRYGA: Which is why Haugen accused them of putting profits over user safety. She talks about the January 6 insurrection as it relates to this civic integrity unit that was set up leading up to the 2020 election that she outlines did a number of correct things to tone down the rhetoric and avoid sort of more uproar leading up to the election. But then, just as quickly as they put that unit up, they took it down right after the election. And, of course, we know what transpired just a few months later. Why, in your opinion, given all the work that you have done researching for this book, would they have taken that down so quickly?

FRENKEL: Well, I mean, it goes to what we said just moments ago, which is that it wasn’t really good for their business.

About This Episode

Gerard Ryle; Sheera Frenkel; Jonathan Franzen; Kate Bowler
